Professor: Andrea Bajcsy (abajcsy [at] cmu [dot] edu)

Lecture Time: Mon & Wed, 11:00 am - 12:20 pm

Syllabus: PDF

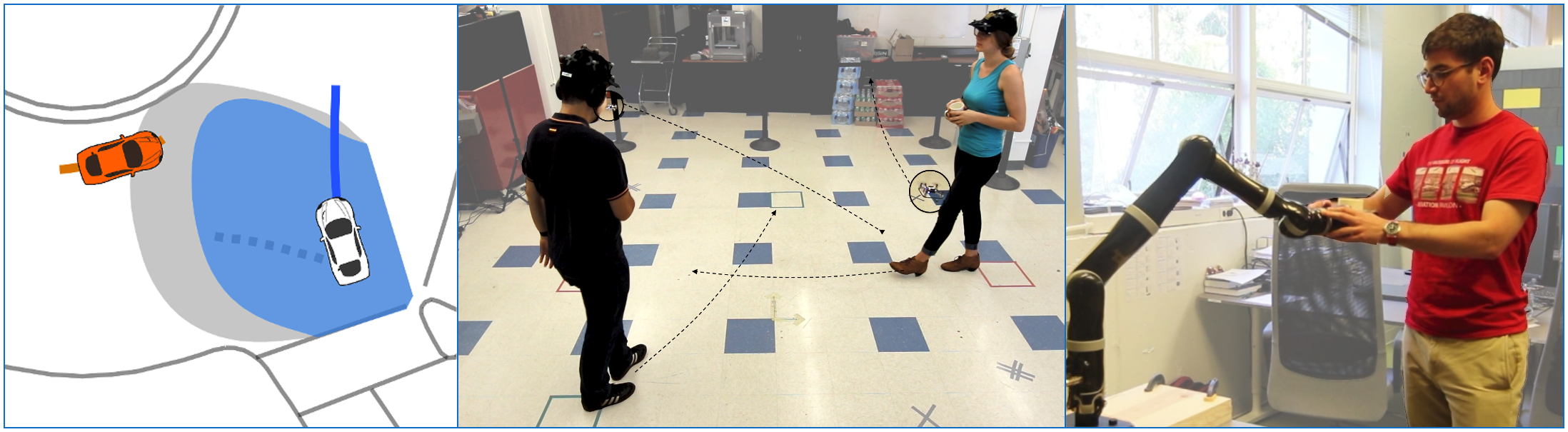

Overview

Robot deployment around real people is rapidly accelerating: autonomous cars navigate through crowded cities on a daily basis, assistive robots increasingly help end-users with daily living tasks, and large teams of human engineers interactively teach robots basic skills. However, robot interaction with humans requires us to re-evaluate the assumptions built into all components of our autonomy algorithms, from decision-making, to machine learning, to safety analysis. In this graduate seminar class, we will build the mathematical foundations for modeling human-robot interaction, develop the tools to analyze the safety and reliability of robots deployed around people, and investigate algorithms for robot learning from human data. The approaches covered will draw upon a variety of tools such as optimal control, dynamic game theory, Bayesian inference, and modern machine learning. Throughout the class, there will also be guest lectures from experts in the field. Students will practice essential research skills including reviewing papers, writing research project proposals, and technical communication.

Schedule (tentative)

| Date | Topic | Info |
|---|---|---|
| Week 1 Mon, Jan 15 | No Class (MLK Day) | |
| Week 1 Wed, Jan 17 | Lecture: Introduction | Materials: Slides |
| Week 2 Mon, Jan 22 | Lecture: Dynamical systems model of interaction | Materials: Notes |
| Week 2 Wed, Jan 24 | Lecture: Optimal control & decision-making | Materials: Notes |
| Week 3 Mon, Jan 29 | Lecture: Multi-agent games & robust optimal control | Further reading:<br>Materials: Notes |
| Week 3 Wed, Jan 31 | Lecture: Safety Analysis I | Further reading:<br>Materials: Notes |
| Week 4 Mon, Feb 5 | Lecture: Safety Analysis II | Due: Project Proposal<br>Materials: Notes |
| Week 4 Wed, Feb 7 | Paper discussion: Computationally scalable safety | Required reading:<br>Further reading: |
| Week 5 Mon, Feb 12 | Guest Lecture: Jason Choi (UC Berkeley) | Title: Safety Filters for Uncertain Dynamical Systems: Control Theory & Data-driven Approaches<br>Abstract: Safety is a primary concern when deploying autonomous robots in the real world. Model-based controllers designed to ensure safety constraints often fail due to model uncertainties present in real physical systems. Providing practical safety guarantees for uncertain systems is a significant challenge, which will be the main focus of this talk. In the first part of the talk, I will review three of the most popular methods for implementing safety filters in general nonlinear systems: Hamilton-Jacobi Reachability, Control Barrier Functions, and Model Predictive Control. The theories underlying each of these methods are well-established for systems with good mathematical models, and have been extended to account for the uncertainties of real-world systems. I will discuss the strengths, drawbacks, and connections of each method. In the second part of the talk, I will discuss how data-driven methods can help resolve the challenge. I will provide an overview of various data-driven safety filters developed during my PhD studies. Finally, I will explore remaining open research problems in addressing safety effectively for real-world robot autonomy.<br>Materials: Recording<br>Further reading: |
| Week 5 Wed, Feb 14 | Paper discussion: Safety filtering around humans | Required reading: |
| Week 6 Mon, Feb 19 | Lecture: Human prediction | Due: Homework<br>Further reading:<br>Materials: Notes |
| Week 6 Wed, Feb 21 | Guest Lecture: Lasse Peters (TU Delft) | Title: Game-Theoretic Models for Multi-Agent Interaction<br>Abstract: When multiple agents operate in a common environment, their actions are naturally interdependent and this coupling complicates planning. In this lecture, we will approach this problem through the lens of dynamic game theory. We will discuss how to model multi-agent interactions as general-sum games over continuous states and actions, characterize solution concepts of such games, and highlight the key challenges of solving them in practice. Based on this foundation, we will review established techniques to tractably approximate game solutions for online decision-making. Finally, we will discuss extensions of the game-theoretic framework to settings that involve incomplete knowledge about the intent, dynamics, or state of other agents.<br>Materials: Recording<br>Further reading: |
| Week 7 Mon, Feb 26 | Lecture: Human prediction: Data-driven | Further reading:<br>Materials: Notes |
| Week 7 Wed, Feb 28 | Guest Lecture: Dr. Boris Ivanovic (NVIDIA) | Title: Behavior Prediction as a Nucleus of Modern AV Research<br>Abstract: Research on behavior prediction, the task of predicting the future motion of agents, has had an outsized impact on multiple aspects of autonomous vehicles (AVs). From direct improvements in online driving performance to deeper connections between AV stack modules to enabling closed-loop training and evaluation in simulation with intelligent reactive agents, behavior prediction has served as a nucleus for much of modern AV research. In this lecture, I will discuss recent advancements along each of these directions, covering modern approaches for behavior prediction, generalization to unseen environments, tighter integrations of AV stack components (towards end-to-end AV architectures), and methods for simulating the behaviors of agents. Finally, I will outline some open research problems in modeling human motion and their potential impacts on downstream driving performance.<br>Materials: Recording |
| Week 8 Mon, Mar 4 | No Class (Spring Break) | |
| Week 8 Wed, Mar 6 | No Class (Spring Break) | |
| Week 9 Mon, Mar 11 | Paper discussion: Embedding human models into safety I | Required reading: |
| Week 9 Wed, Mar 13 | Guest Lecture: Prof. David Fridovich-Keil (UT Austin) | Title: Inverse games: an MPEC by any other name…<br>Abstract: This lecture will introduce mathematical programs with equilibrium constraints (MPECs), and show how they encompass an “inverse” variant of mathematical games in which parameters of players’ costs and constraints must be inferred from data, or designed to yield specific equilibrium outcomes. We will begin with a brief review of the fundamentals of constrained optimization, and discuss how these familiar concepts appear in inverse games and the implications for designing efficient solution methods. The lecture will conclude with a review of several recent papers that present new developments in this space.<br>Materials: Recording |
| Week 10 Mon, Mar 18 | Paper discussion: Embedding human models into safety II | Due: Mid-term Report<br>Required reading: |
| Week 10 Wed, Mar 20 | Lecture: Sources of human feedback | Further reading:<br>Materials: Slides |
| Week 11 Mon, Mar 25 | Lecture: Reliably learning from human feedback | Further reading: |
| Week 11 Wed, Mar 27 | Paper discussion: Reinforcement learning from human feedback | Required reading:<br>Further reading: |
| Week 12 Mon, Apr 1 | Guest Lecture: Prof. Sanjiban Choudhury (Cornell) | Title: To RL or not to RL<br>Abstract: Model-based Reinforcement Learning (MBRL) and Inverse Reinforcement Learning (IRL) are powerful techniques that leverage expert demonstrations to learn either models or rewards. However, traditional approaches suffer from a computational weakness: they require repeatedly solving a hard reinforcement learning (RL) problem as a subroutine. This requirement presents a formidable barrier to scalability. Is the RL subroutine necessary? After all, if the expert already provides a distribution of “good” states, does the learner really need to explore? In this work, we demonstrate an informed MBRL and IRL reduction that utilizes the state distribution of the expert to provide an exponential speedup in theory. In practice, we find that we are able to significantly speed up over prior art on continuous control tasks.<br>Materials: Recording |
| Week 12 Wed, Apr 3 | Paper discussion: Alignment | Required reading:<br>Further reading: |
| Week 13 Mon, Apr 8 | Paper discussion: Learning constraints from demonstration | Required reading: |
| Week 13 Wed, Apr 10 | Paper discussion: Latent-space safety | Required reading: |
| Week 14 Mon, Apr 15 | Guest Lecture: Prof. Aditi Raghunathan (CMU) | Title: Robust machine learning with foundation models<br>Abstract: In recent years, foundation models (large pretrained models that can be adapted for a wide range of tasks) have achieved state-of-the-art performance on a variety of tasks. While the pretrained models are trained on broad data, the adaptation (or fine-tuning) process is often performed on limited data. As a result, the challenges of distribution shift, where a model is deployed on a different distribution than the fine-tuning data, remain, albeit in a different form. This talk will provide some concrete instances of this challenge and discuss some principles for developing robust approaches. |
| Week 14 Wed, Apr 17 | Lecture: What is safety in interactive robotics? | Further reading:<br>Materials: Slides |
| Week 15 Mon, Apr 22 | Final presentations | Due: Slides uploaded to Canvas on Apr. 21, 11:59pm ET<br>Presenters: Bowen Jiang, Yilin Wu, Weihao (Zack) Zeng, Samuel Li, Sidney Nimako-Boateng, Xilun Zhang |
| Week 15 Wed, Apr 24 | Final presentations | Due: Final report uploaded to Canvas on May 1, 11:59pm ET<br>Presenters: Jehan Yang, Eliot Xing, Yumeng Xiu, Kavya Puthuveetil |